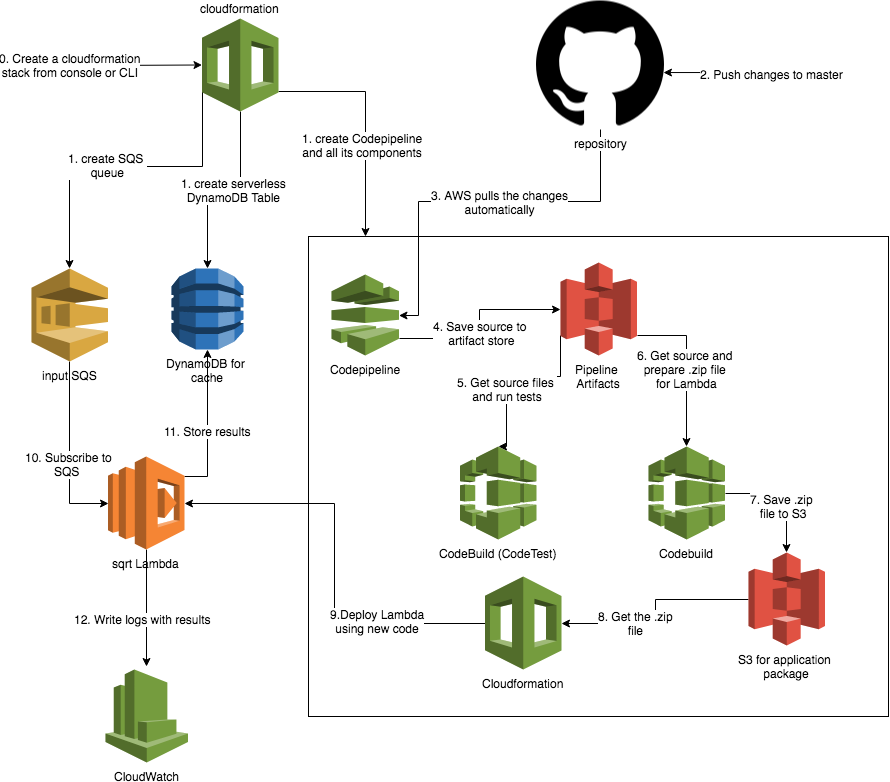

Continuous integration and continuous delivery are powerful practices that let you release software faster and at a higher quality. This post walks through the steps to implement a CI/CD pipeline for a small Lambda function that calculates square roots by:

- getting a message from SQS that contains the number to calculate `sqrt` for

- checking if the calculation was done before by querying DynamoDB

- if there is no cached answer in DynamoDB - calculating `sqrt` and saving the result

- printing the result so it’s visible in CloudWatch logs
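The flow above can be sketched in a few lines. This is a minimal illustration rather than the code from the repo: `handle_event` is a hypothetical name, the message body is assumed to hold the number as plain text, and a plain dict stands in for the DynamoDB table so the logic can run locally.

```python
import math


def handle_event(event, cache):
    """Process SQS records; `cache` is any dict-like store standing in for DynamoDB."""
    results = []
    for record in event.get("Records", []):
        # Assumption: the SQS message body holds the number as plain text.
        number = float(record["body"])
        key = str(number)
        if key in cache:
            # Cache hit: reuse the stored answer.
            root = cache[key]
        else:
            # Cache miss: calculate and store the result for next time.
            root = math.sqrt(number)
            cache[key] = root
        # In Lambda, anything printed ends up in CloudWatch logs.
        print(f"sqrt({number}) = {root}")
        results.append(root)
    return results
```

In a real function the dict would be replaced by reads and writes against the DynamoDB table, and the Lambda entry point would call this helper with the incoming event.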

Things I’d like the pipeline to do:

- create all the resources - SQS and Dynamo

- subscribe to any changes that are committed to master branch of GitHub repo

- run tests - I’m going to run unit tests, but since the resources are there you can run integration/end-to-end tests

- build the package for lambda with all python dependencies

- deploy the package

Pipeline architecture:

The initial CloudFormation template along with all the code can be found in this GitHub repo

The pipeline.yaml (see the aws folder in the repo) contains the CloudFormation template that creates SQS, Dynamo and a CodePipeline with all its steps:

- source step to get the source code from GitHub

- CodeTest (CodeBuild type) to run a container to run tests

- CodeBuild - a container that will prepare the build - a zip file on S3 that Lambda can digest

- CodeDeploy - the step to deploy the newly built Lambda.

The first thing to do is to create a GitHub OAuth token - just follow steps 1-6 from this AWS doc.

Next, you need to create a stack from the AWS console - go to CloudFormation and click Create Stack. It will ask you to fill in the stack parameters:

- name - a reference to the resources of the stack

- GitHub token, repo owner and repo name

The newly created pipeline appears in the CodePipeline console right after that. If you open it, you will see the Source, CodeTest, CodeBuild and CodeDeploy stages.

Also, all additional resources will be created:

- SQS queue that will feed the Lambda

- DynamoDB table with Pay-Per-Request billing

- S3 bucket for pipeline artifacts - it’s the mechanism CodePipeline uses to pass the output of one stage to the next

- S3 bucket that will hold zip file with packaged Lambda code

The Source step of the pipeline is fairly autonomous. AWS monitors the repository and starts a pipeline execution whenever there is a push to the master branch. There is a limit on how many repositories it can monitor, so an alternative is to implement a GitHub webhook that triggers a separate Lambda, which in turn starts the pipeline execution.
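As a rough sketch of that webhook alternative, the separate Lambda would need to verify that the request really came from GitHub, check that the push was to the right branch, and then start the pipeline. The function names and parameters below are hypothetical and not part of the repo; the `codepipeline` argument is assumed to be a `boto3.client("codepipeline")` in real use, and is passed in so the logic can be exercised without AWS access.

```python
import hashlib
import hmac
import json


def is_valid_signature(secret, body, signature_header):
    """Check GitHub's X-Hub-Signature-256 header against the raw request body."""
    expected = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, signature_header or "")


def handle_webhook(secret, body, signature_header, codepipeline, pipeline_name,
                   branch="master"):
    """Start the pipeline for pushes to `branch`; return True if it was started."""
    if not is_valid_signature(secret, body, signature_header):
        return False
    payload = json.loads(body)
    # Push events carry the branch as a full ref, e.g. "refs/heads/master".
    if payload.get("ref") != f"refs/heads/{branch}":
        return False
    # Assumption: `codepipeline` is boto3.client("codepipeline") in real use.
    codepipeline.start_pipeline_execution(name=pipeline_name)
    return True
```

Both `secret` and `body` are bytes here: the signature has to be computed over the raw request body, before any JSON parsing.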

CodeTest is a step of CodeBuild type that runs unit tests. Developers usually run unit tests themselves, for example through pre-commit hooks on their local machines, but this step guarantees the tests are run for every push even if someone skipped them locally. It can also run tests from higher levels of the testing pyramid.
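To give an idea of what such a stage might execute, here is a small `unittest` sketch. The `cached_sqrt` function is a hypothetical stand-in for the Lambda's logic, with a dict playing the role of the DynamoDB cache; the tests in the repo may look quite different.

```python
import math
import unittest


def cached_sqrt(number, cache):
    """Hypothetical function under test: sqrt with a dict-like cache."""
    if number not in cache:
        cache[number] = math.sqrt(number)
    return cache[number]


class CachedSqrtTest(unittest.TestCase):
    def test_calculates_and_stores_result(self):
        cache = {}
        self.assertEqual(cached_sqrt(16, cache), 4.0)
        self.assertEqual(cache, {16: 4.0})

    def test_returns_cached_value_without_recalculating(self):
        # A deliberately wrong cached value proves the cache was used.
        self.assertEqual(cached_sqrt(16, {16: -1.0}), -1.0)

    def test_rejects_negative_numbers(self):
        # math.sqrt raises ValueError for negative input.
        with self.assertRaises(ValueError):
            cached_sqrt(-1, {})
```

A buildspec command such as `python -m unittest discover` would pick up tests like these, and a failing test fails the stage and stops the pipeline.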

CodeBuild uses chalice package to do a couple of things:

- create CloudFormation template to deploy lambda

- package Lambda code

- create Lambda policies

The important part of this stage is the image the container uses. Some Python packages are wrappers around C libraries that are compiled when we run pip install, so the OS where pip install runs should be similar to the OS that will run the code and use these packages. I found the amazonlinux:latest image on Docker Hub, which resembles the Lambda runtime. Dependencies are installed into a virtual environment, and all the site-packages are then copied to the vendor folder.

- python3 -m venv v-env && . v-env/bin/activate && pip install --upgrade pip && pip install -r requirements/requirements.txt && deactivate

- mkdir vendor

- cp -R v-env/lib/python3.7/site-packages/. vendor

- cp -R v-env/lib64/python3.7/site-packages/. vendor

Next is the code itself - it should be placed in a vendor folder as per the chalice docs. I don’t like having that folder in my project structure, so I create it in codebuild.yaml (which is referenced in CodeTest as a buildspec - the script to run) and copy everything into it.

- cp -R my_package vendor

There is another difficulty - a Lambda IAM policy. Ideally, it should be as restrictive as possible, but grant access to the SQS and Dynamo we already created. In this stage I’m passing a number of different environment variables to the container:

- the ones that start with `LAMBDA_ENV` go into the Lambda config to be available at its runtime

- the ones starting with `POLICY_ENV` are used to generate the policy document (the chalice policy generator is not yet good enough for that)

There is a config_generator.py script that reads these variables and puts them in the proper places. LAMBDA_ENV variables are written to .chalice/config.json and POLICY_ENV variables go to .chalice/police.json - both files are used later by chalice to generate the CloudFormation template.
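A script of this kind could look roughly like the sketch below. It is not the config_generator.py from the repo: the exact prefixes (`LAMBDA_ENV_`, `POLICY_ENV_`), the assumption that the policy variables hold resource ARNs, and the list of allowed actions are all illustrative guesses.

```python
import json


def collect(environ, prefix):
    """Return variables whose names start with `prefix`, with the prefix removed."""
    return {name[len(prefix):]: value
            for name, value in environ.items() if name.startswith(prefix)}


def build_config(environ, app_name):
    """Chalice config with the LAMBDA_ENV_* variables exposed to the function."""
    return {
        "version": "2.0",
        "app_name": app_name,
        "environment_variables": collect(environ, "LAMBDA_ENV_"),
    }


def build_policy(environ):
    """IAM policy document granting access to the ARNs found in POLICY_ENV_*."""
    # Assumption: each POLICY_ENV_* value is the ARN of a resource to allow.
    arns = sorted(collect(environ, "POLICY_ENV_").values())
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            # Assumed action list; narrow it to what the function really calls.
            "Action": [
                "sqs:ReceiveMessage",
                "sqs:DeleteMessage",
                "sqs:GetQueueAttributes",
                "dynamodb:GetItem",
                "dynamodb:PutItem",
            ],
            "Resource": arns,
        }],
    }
```

Calling `build_config(os.environ, ...)` and `build_policy(os.environ)` and dumping each result with `json.dump` into the `.chalice` folder would complete the step. Keeping the builders free of file I/O makes them easy to unit test.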

Finally, the build has to stay under Lambda’s deployment package size limit (250 MB unzipped), so delete the extra files (boto3 is needed for the build, but it’s already available in the Lambda runtime, so there is no need to ship it).

- echo 'Size of build' $(du -sm --exclude=./v-env .) 'MB'

- find . -name "*.pyc" -exec rm -f {} \;

- find ./vendor -name 'boto3' -prune -type d -exec rm -rf {} \;

- find ./vendor -name 'botocore' -prune -type d -exec rm -rf {} \;

- echo 'Size of build after cleaning' $(du -sm --exclude=./v-env .) 'MB'
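The `echo` lines above only report the size. If you would rather have the build fail when the package is too big, a small check along the following lines could be run as an extra command. This is a sketch, not something the repo contains: it assumes Lambda's documented 250 MB limit for an unzipped package, and it sums file sizes rather than disk usage, so its numbers can differ slightly from `du`.

```python
import os

# Lambda's documented limit for an unzipped deployment package.
MAX_UNZIPPED_BYTES = 250 * 1024 * 1024


def build_size(root, exclude=("v-env",)):
    """Total size in bytes of files under `root`, skipping excluded directory names."""
    total = 0
    for dirpath, dirnames, filenames in os.walk(root):
        # Editing dirnames in place stops os.walk from descending into them.
        dirnames[:] = [d for d in dirnames if d not in exclude]
        for filename in filenames:
            path = os.path.join(dirpath, filename)
            if not os.path.islink(path):
                total += os.path.getsize(path)
    return total


def check_build(root, limit=MAX_UNZIPPED_BYTES):
    """Raise if the build is too large; otherwise return its size in bytes."""
    size = build_size(root)
    if size >= limit:
        raise RuntimeError(f"Build is {size} bytes, limit is {limit}")
    return size
```

Calling `check_build(".")` from the build directory raises an exception when the limit is exceeded, and a non-zero exit code fails the CodeBuild stage.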

In the end, the chalice package command creates a zip file along with a sam.json file. The AWS CLI command then prepares the final CloudFormation template - transformed.yaml - that drives the deploy stage.

- . v-env/bin/activate && python config_generator.py && chalice package /tmp/packaged && deactivate

- aws cloudformation package --template-file /tmp/packaged/sam.json --s3-bucket ${APP_S3_BUCKET} --output-template-file transformed.yaml

After all that, every change to the master branch of your repo should be automatically tested, (ideally) integrated and deployed.

If you use Python in a serverless environment on AWS or use CI/CD for such applications, connect with me on LinkedIn

Code for this post is available here